SpringCloud-Sentinel

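Service circuit breaking and degradation

Sentinel circuit breaking and degradation

Main features: real-time monitoring, machine discovery, rule configuration

Installing the Sentinel console

Docker image build: iexxk/dockerbuild-Sentinel

1 | # Base image: alpine (small, secure, popular, convenient) |

Deployment

1 | # Deployment note: it must be deployed in the same stack as the other services, otherwise port 8719 is unreachable |

Access the console at 127.0.0.1:8718. The default username/password is sentinel/sentinel.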

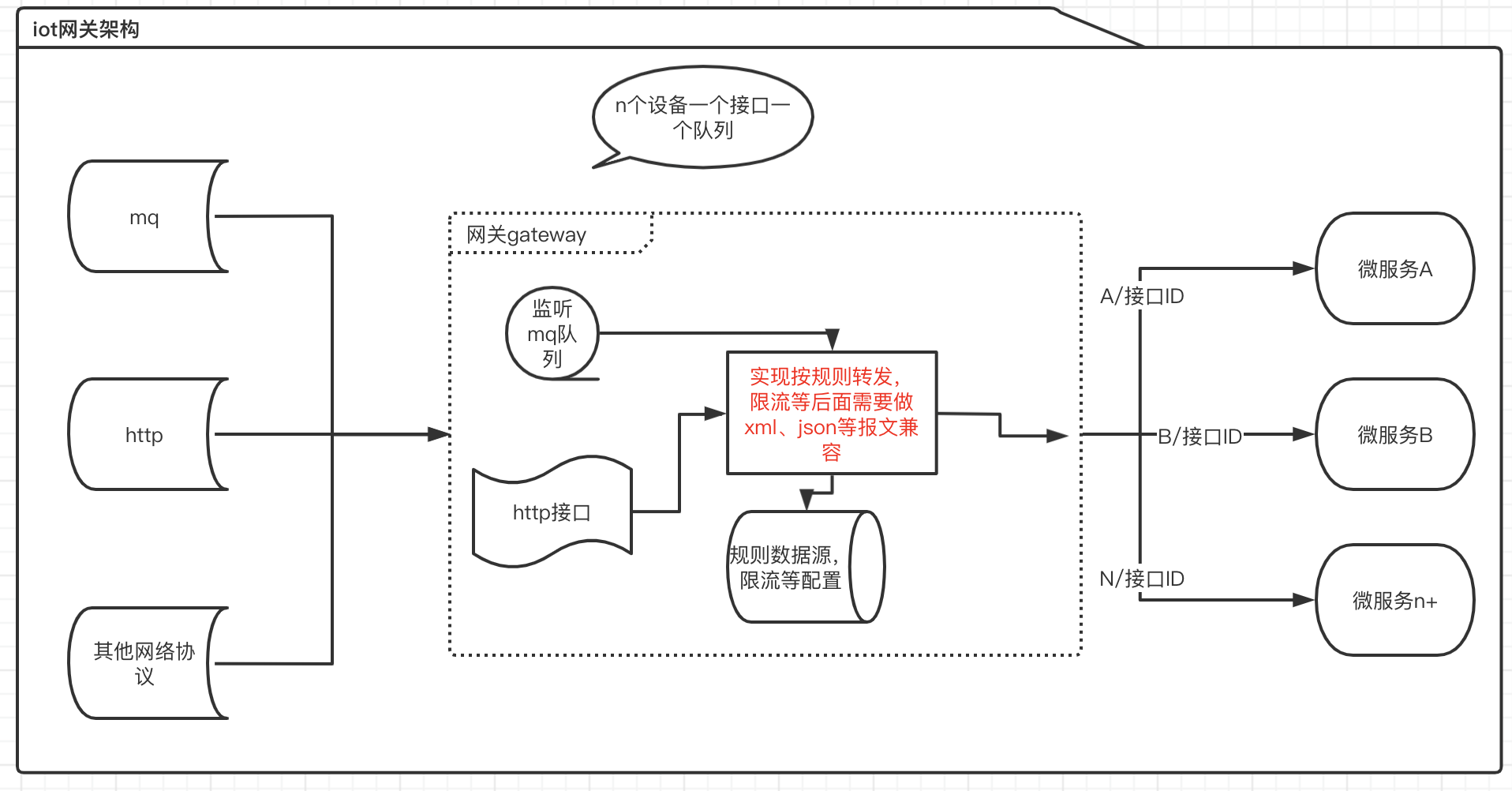

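gateway: an asynchronous gateway. The request body can be read by caching it with ReadBodyRoutePredicateFactory.

zuul: a synchronous, blocking gateway, so reading or modifying the body is comparatively simple.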

1 | - id: xkiot-cmdb |

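Requirement: the body only has to be read to verify a signature and does not need to be modified, so the caching approach is used for reading. The key class is ReadBodyRoutePredicateFactory.

Add this configuration to a class annotated with @Configuration, or create a new configuration class. The bodyPredicate defined here is referenced by the predicate in the yml in step 2.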

1 | /** |

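First load the ReadBodyRoutePredicateFactory class (it can also be overridden with a custom class; the same applies to the classes that modify the body). Loading it requires configuration in the yml: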

1 | - id: xkiot-cmdb |

Then implement a filter that receives the body and validates it:

1 | package com.xkiot.gateway.filter; |
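The validation step inside such a filter can be sketched in plain Java. This is only an illustration under assumptions: the source does not say which signing scheme is used, so HMAC-SHA256 over the raw body with a shared secret, the class name `BodySignatureVerifier`, and the idea that the signature arrives as a hex string in a request header are all hypothetical. In the gateway, the body string would come from the value cached by ReadBodyRoutePredicateFactory.

```java
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.MessageDigest;
import java.util.Locale;

// Hypothetical helper; the signing scheme (HMAC-SHA256, hex encoded) is an assumption.
public class BodySignatureVerifier {

    /** Computes a lowercase hex HMAC-SHA256 of the body using the shared secret. */
    public static String sign(String body, String secret) {
        try {
            Mac mac = Mac.getInstance("HmacSHA256");
            mac.init(new SecretKeySpec(secret.getBytes(StandardCharsets.UTF_8), "HmacSHA256"));
            byte[] digest = mac.doFinal(body.getBytes(StandardCharsets.UTF_8));
            StringBuilder hex = new StringBuilder(digest.length * 2);
            for (byte b : digest) {
                hex.append(String.format("%02x", b));
            }
            return hex.toString();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("HmacSHA256 is not available", e);
        }
    }

    /** Returns true only if the supplied signature matches the cached body. */
    public static boolean verify(String cachedBody, String secret, String signature) {
        if (cachedBody == null || signature == null) {
            return false;
        }
        byte[] expected = sign(cachedBody, secret).getBytes(StandardCharsets.UTF_8);
        byte[] actual = signature.toLowerCase(Locale.ROOT).getBytes(StandardCharsets.UTF_8);
        // Constant-time comparison avoids leaking how many characters matched.
        return MessageDigest.isEqual(expected, actual);
    }
}
```

Because the body is only read and never rewritten, the filter can reject the request when `verify` returns false and otherwise pass the exchange on unchanged.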

Reference the filter from step 3:

1 | filters: |

The goal is a dynamic gateway that is compatible with multiple network protocols and multiple message formats.

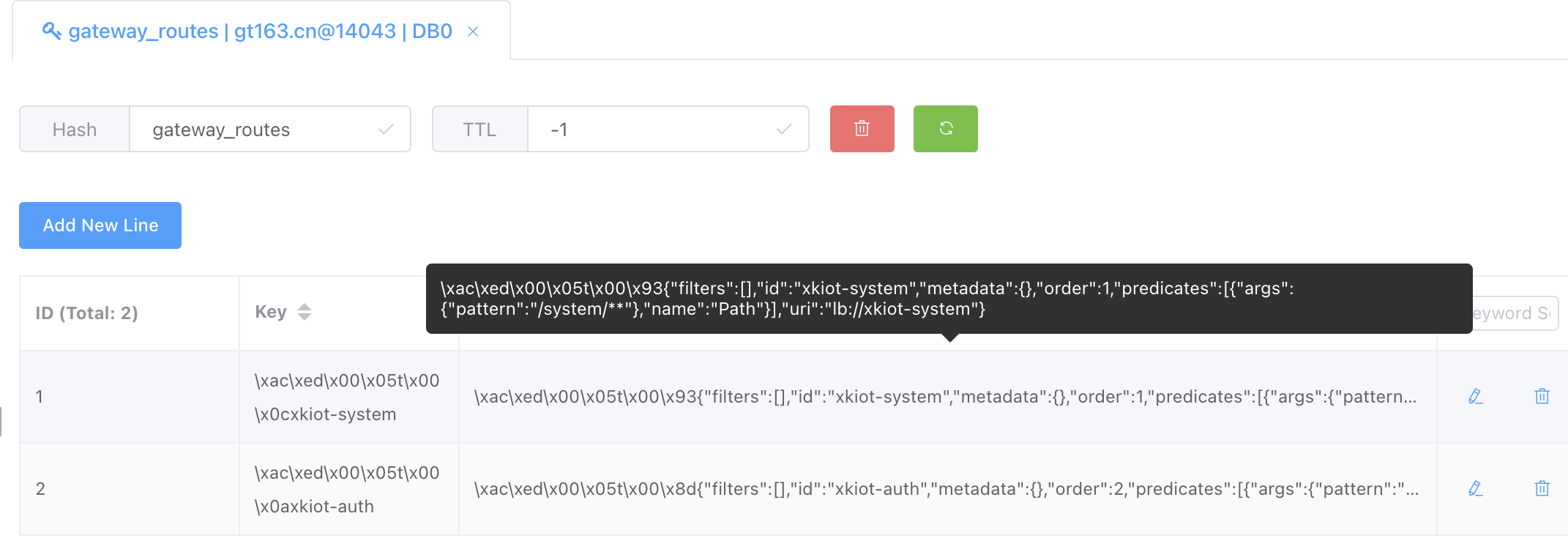

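RouteDefinitionRepository is the route store: an interface for storing route rules. By implementing it, route rules can be persisted to a middleware of your choice (redis, a database, etc.).

Implement its three methods: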

1 | @Component |
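The storage contract behind those three methods (list all routes, save one, delete one by id) can be sketched without Spring. Note the assumptions: the real RouteDefinitionRepository is reactive and works with `Mono`/`Flux` and `RouteDefinition`, whereas the `InMemoryRouteStore` and `Route` types below are hypothetical plain-Java stand-ins, and a map plays the role that redis plays in the article.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Plain-Java stand-in for a route store; not the Spring Cloud Gateway interface itself.
public class InMemoryRouteStore {

    /** Hypothetical, simplified route definition (id, target uri, order). */
    public static final class Route {
        public final String id;
        public final String uri;
        public final int order;

        public Route(String id, String uri, int order) {
            this.id = id;
            this.uri = uri;
            this.order = order;
        }
    }

    // Keyed by route id, so saving an existing id replaces the old rule.
    private final Map<String, Route> routes = new ConcurrentHashMap<>();

    /** Stores or overwrites a route rule. */
    public void save(Route route) {
        routes.put(route.id, route);
    }

    /** Removes a route rule; returns false if the id was unknown. */
    public boolean delete(String id) {
        return routes.remove(id) != null;
    }

    /** Returns a snapshot of all stored route rules. */
    public List<Route> getRouteDefinitions() {
        return new ArrayList<>(routes.values());
    }
}
```

A redis-backed version would keep the same three operations and swap the map for hash reads and writes. After each change the gateway still has to be told to reload its routes, which is what the event publishing step is for.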

ApplicationEventPublisherAware, the event publishing interface:

1 | @Service |

Then add an endpoint for setting routes:

1 | @RestController |

Test by sending a request that adds a route configuration to {{gateway}}/route

The JSON payload is as follows:

1 | [ |

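In this setup, an order of 0 means the route configuration is not enabled; id is the service id, uri is the microservice address, and predicates are the routing rules. The equivalent yml configuration is: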

1 | spring: |

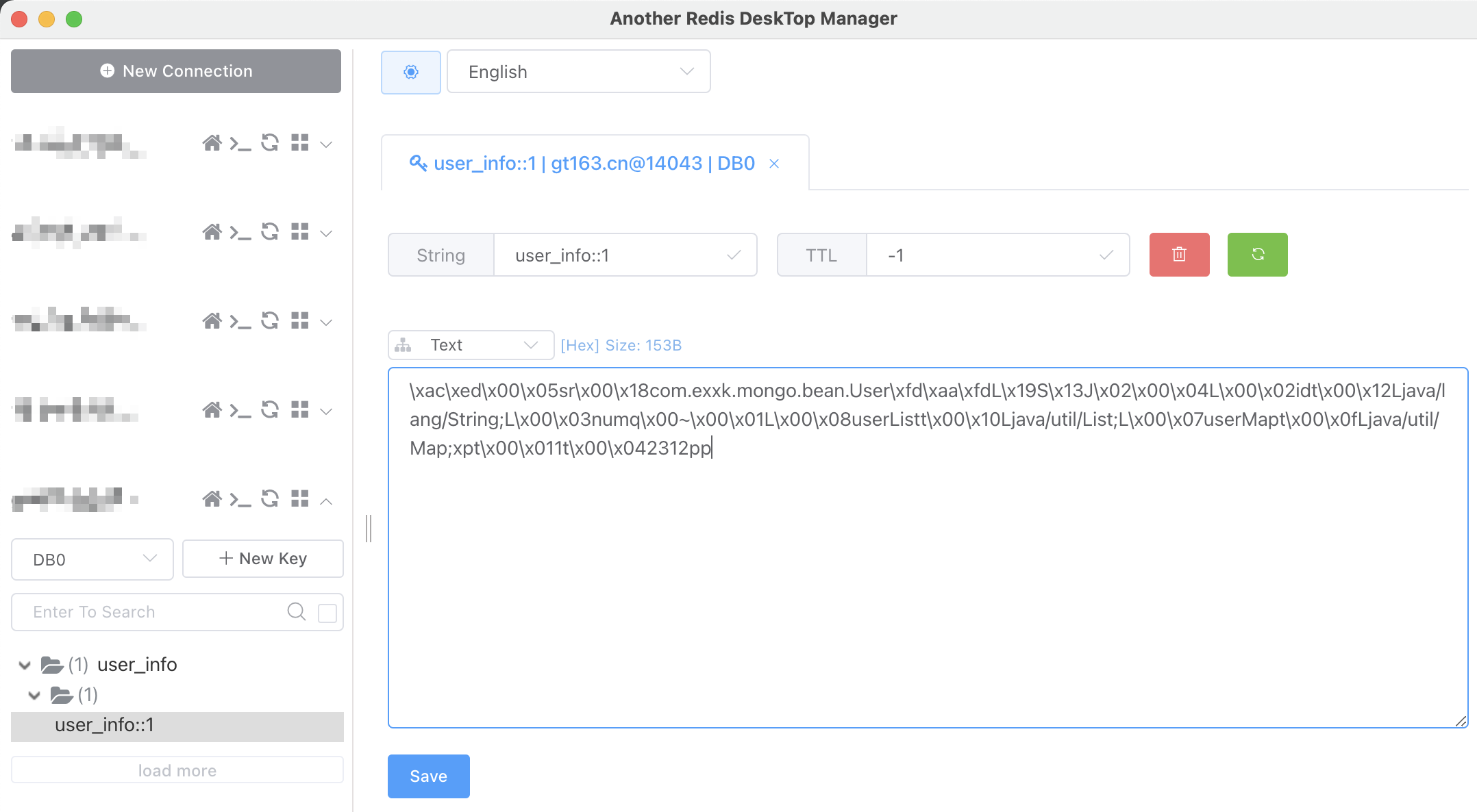

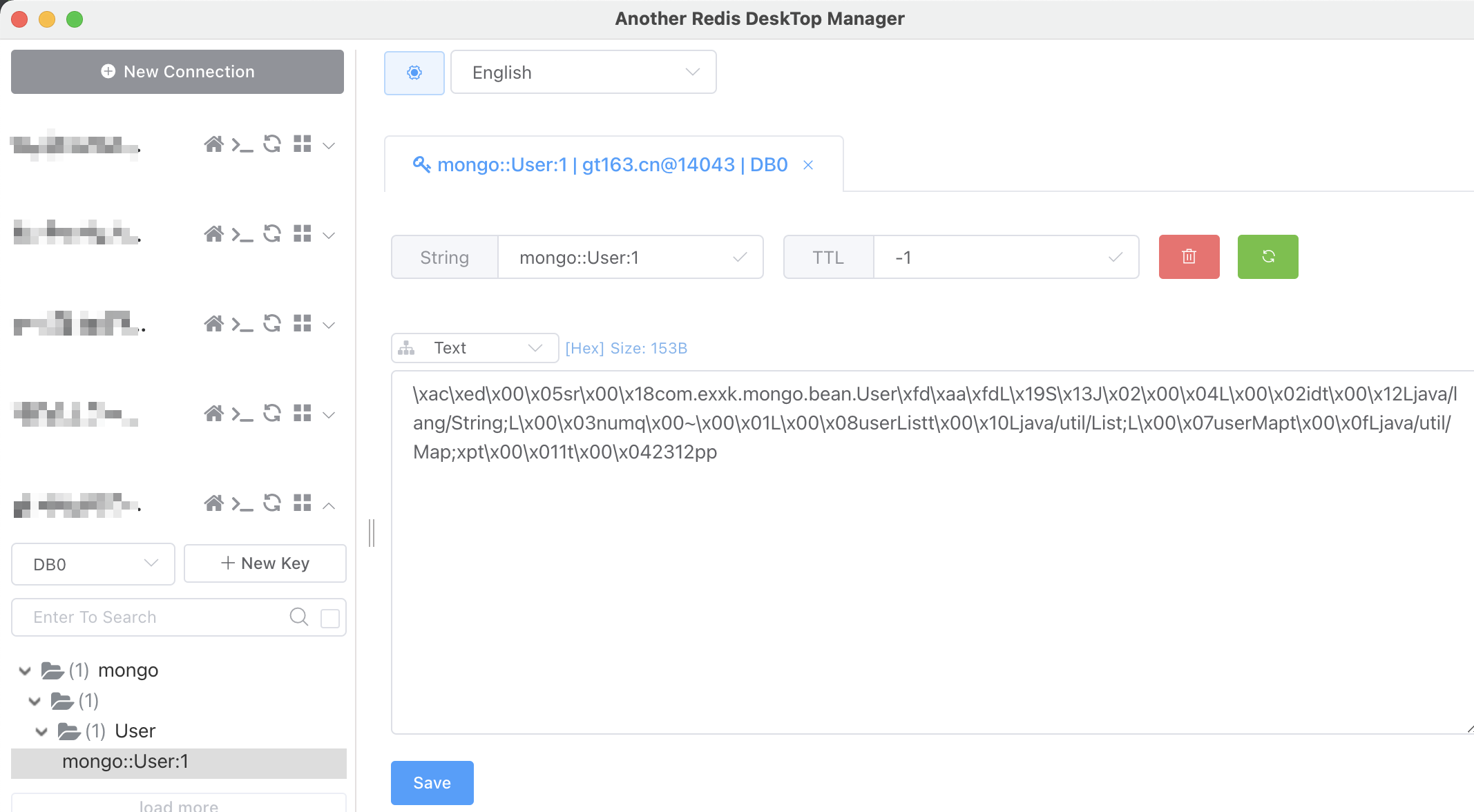

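The data stored in redis looks like this:

Nacos is dedicated to helping you discover, configure, and manage microservices. It provides a simple, easy-to-use feature set for dynamic service discovery, service configuration, service metadata, and traffic management.

Its main role is to replace Spring Cloud's registry and configuration center.

Dependencies: nacos relies on a MySQL database (other databases are also possible) for storage.

Access: ip + port. The default login username/password is nacos/nacos.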

1 | version: '3' |

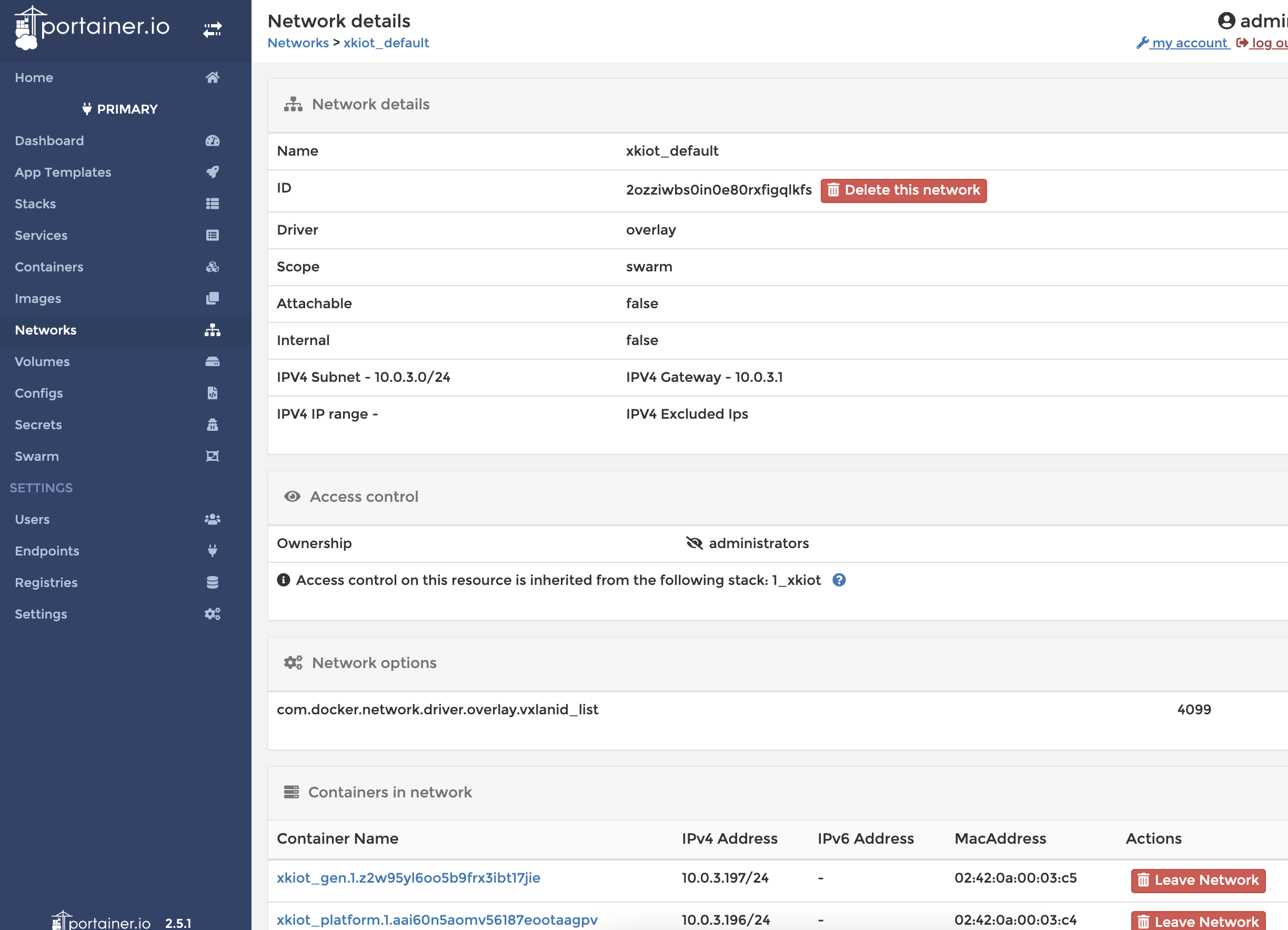

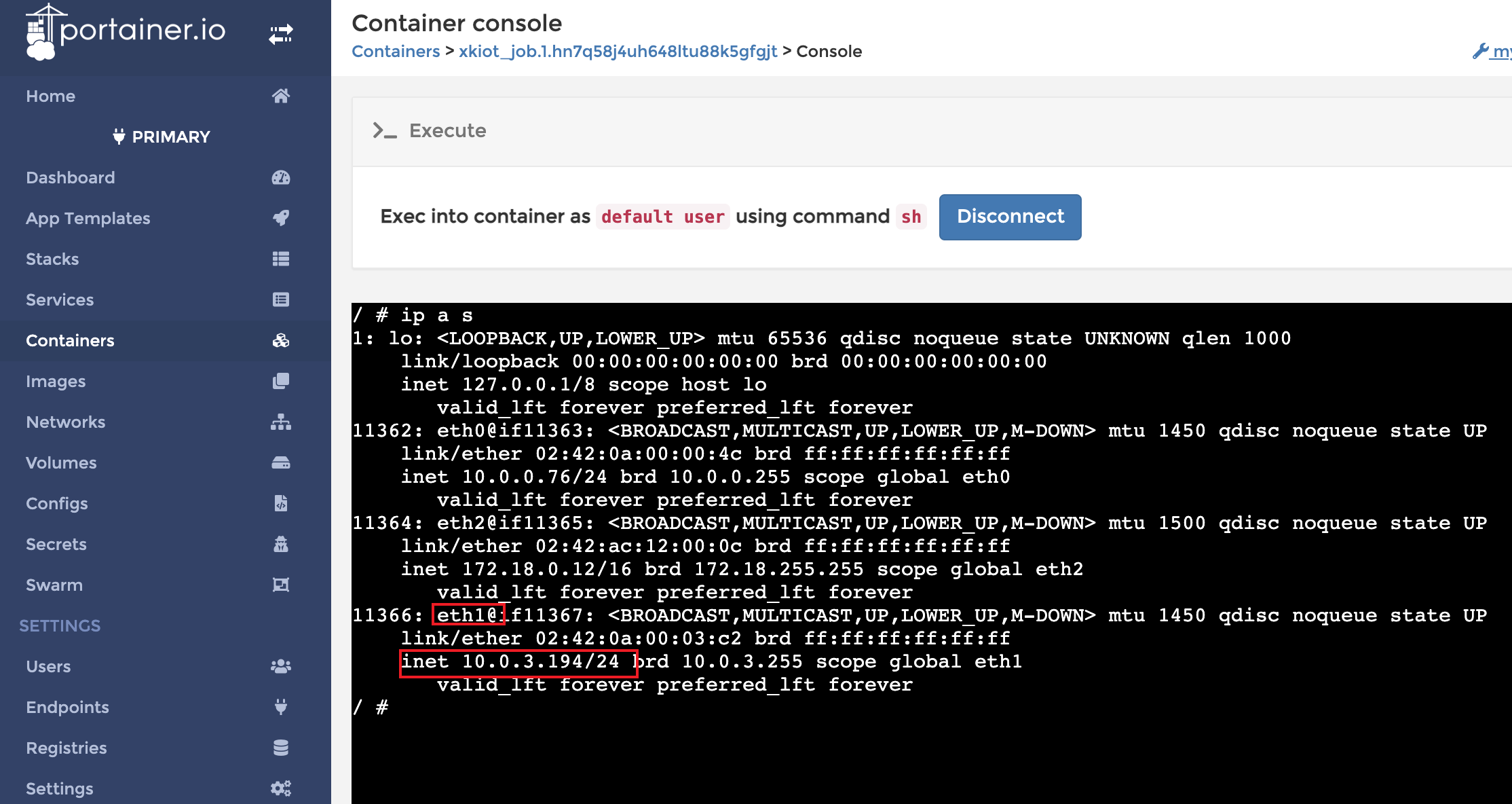

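When nacos is used as the registry and configuration center and the deployment mode is docker swarm, each container has several network interfaces. By default one of them (typically eth0) is picked, so the registered ip can be an internal one that other containers cannot reach.

First look at the stack's network. The figure below shows that the network in use starts with 10.0.3.

A preferred network can therefore be set: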

1 | environment: |

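Alternatively, enter the container and work out which interfaces to ignore. Here eth0 and eth2 have to be ignored, leaving only the interface that is needed.

The configuration parameter is therefore: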

1 | - spring.cloud.inetutils.ignored-interfaces=eth0.*,eth2.* |
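How the two settings act on candidate interfaces can be sketched as two small predicates. This is an approximation under assumptions, not Spring Cloud's own code: the class `InterfaceFilter` is hypothetical, ignored-interface entries are treated as regular expressions matched against the whole interface name, and a preferred network is treated as either a regular expression or a plain address prefix.

```java
import java.util.List;

// Hypothetical sketch of interface selection; names and behaviour are assumptions.
public class InterfaceFilter {

    /** True if the interface name fully matches any ignored pattern, e.g. "eth0.*". */
    public static boolean isIgnored(String interfaceName, List<String> ignoredPatterns) {
        for (String pattern : ignoredPatterns) {
            if (interfaceName.matches(pattern)) {
                return true;
            }
        }
        return false;
    }

    /** True if no preference is configured, or the address matches a preferred network. */
    public static boolean isPreferred(String hostAddress, List<String> preferredNetworks) {
        if (preferredNetworks.isEmpty()) {
            return true;
        }
        for (String network : preferredNetworks) {
            if (hostAddress.matches(network) || hostAddress.startsWith(network)) {
                return true;
            }
        }
        return false;
    }
}
```

With the patterns `eth0.*` and `eth2.*`, only eth1 survives the filter. With a preferred network of `10.0.3`, the address 10.0.3.194 is accepted; note that a bare prefix like this would also accept 10.0.31.x, so a trailing dot or a stricter pattern may be safer.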

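For more detailed configuration, see Appendix A: Common application properties.

Network reachability between services can be tested through the Spring Cloud health check endpoint: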

1 | wget http://10.0.3.194:9200/actuator/health -q -O - |

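The redis cache and the database in the project turned out to be inconsistent, because many custom update methods wrote to the database without updating redis. The idea was therefore to implement automatic caching at the lowest layer.

Add the dependency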

1 | compile group: 'org.springframework.boot', name: 'spring-boot-starter-cache', version: '2.1.1.RELEASE' |

Configure redis as the cache middleware. Other cache providers work the same way; the options are generic, ehcache, hazelcast, infinispan, jcache, redis, guava, simple, none.

1 | spring.redis.host=gt163.cn |

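Enable caching by adding the @EnableCaching annotation to the @SpringBootApplication startup class or to a @Configuration class.

Use caching by adding @Cacheable("<unique key in redis, which can also be thought of as the table name>") to the method or class to be cached.

1 | // For example |

1 | // Enable caching |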
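The behaviour these annotations provide is the cache-aside pattern: read through the cache, and drop the cached entry whenever the underlying data changes. The plain-Java sketch below shows only that idea and is not how Spring implements it; the class `CacheAsideSketch`, its two maps standing in for redis and the database, and the read counter are all hypothetical.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical illustration of cache-aside; maps stand in for redis and the database.
public class CacheAsideSketch {

    private final Map<String, String> cache = new ConcurrentHashMap<>();
    private final Map<String, String> database = new ConcurrentHashMap<>();

    /** Counts how often the "database" is actually read. */
    public final AtomicInteger databaseReads = new AtomicInteger();

    public void insert(String id, String value) {
        database.put(id, value);
    }

    /** Like a @Cacheable method: the database is hit only on a cache miss. */
    public String findById(String id) {
        return cache.computeIfAbsent(id, key -> {
            databaseReads.incrementAndGet();
            return database.get(key);
        });
    }

    /** Like a @CacheEvict method: the update also removes the stale cache entry. */
    public void update(String id, String value) {
        database.put(id, value);
        cache.remove(id);
    }
}
```

The inconsistency described earlier is what happens when `update` writes to the database but skips the `cache.remove` step: later reads keep returning the old cached value.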

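The full code is on github: iexxk/springLeaning:mongo

Add the cache annotations at the BaseDao interface layer, then let each subclass inherit the implementation. This gives a generic caching setup.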

BaseDao.java

1 | @CacheConfig(cacheNames = {"mongo"}) |

BaseDaoImpl.java

1 | public class BaseDaoImpl<T, ID> implements BaseDao<T, ID> { |

Now to use it, create UserDao.java

1 | public interface UserDao extends BaseDao<User, String> { |

UserDaoImpl.java

1 | @Repository |

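Finally, calling findById populates the cache.

Because cacheNames (the "table name") does not support SpEL, the actual table name cannot be obtained there. The design therefore uses the common name mongo as a single generic table and puts table plus id into the key. One consequence is that deleteAll clears the cache for every table. Since deleteAll is rarely used, this is acceptable; even if it is called, only the cache is lost and it can be rebuilt from the database.

Reference: "SpringCache扩展@CacheEvict的key模糊匹配清除" (extending Spring Cache so that @CacheEvict can clear keys by wildcard match)

Create the file CustomizedRedisCacheManager.java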

1 | public class CustomizedRedisCacheManager extends RedisCacheManager { |

Create CustomizedRedisCache.java

1 | public class CustomizedRedisCache extends RedisCache { |

Add the configuration CachingConfig.java, which specifies the custom cache classes

1 | @Configuration |

Finally, @CacheEvict now supports wildcard deletion with *.

1 | // Delete all keys starting with table |
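The eviction rule that the customized cache adds can be sketched on its own: a key ending in `*` removes every entry sharing that prefix, and any other key removes exactly one entry. The class `WildcardEvictSketch`, the in-memory map standing in for redis, and the `table:id` key layout with a colon separator are assumptions made for illustration.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical sketch of prefix-wildcard eviction; the map stands in for redis.
public class WildcardEvictSketch {

    private final Map<String, Object> store = new ConcurrentHashMap<>();

    /** Builds a "table plus id" key; the ':' separator is an assumption. */
    public static String key(String table, String id) {
        return table + ":" + id;
    }

    public void put(String key, Object value) {
        store.put(key, value);
    }

    public boolean contains(String key) {
        return store.containsKey(key);
    }

    /** Evicts one key, or every key with the given prefix if it ends in '*'. Returns the count removed. */
    public int evict(String keyOrPattern) {
        if (keyOrPattern.endsWith("*")) {
            String prefix = keyOrPattern.substring(0, keyOrPattern.length() - 1);
            int before = store.size();
            store.keySet().removeIf(k -> k.startsWith(prefix));
            return before - store.size();
        }
        return store.remove(keyOrPattern) != null ? 1 : 0;
    }
}
```

Evicting `user:*` then clears only the user entries and leaves other tables cached, which narrows the "deleteAll clears everything" trade-off of the single generic cache name.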

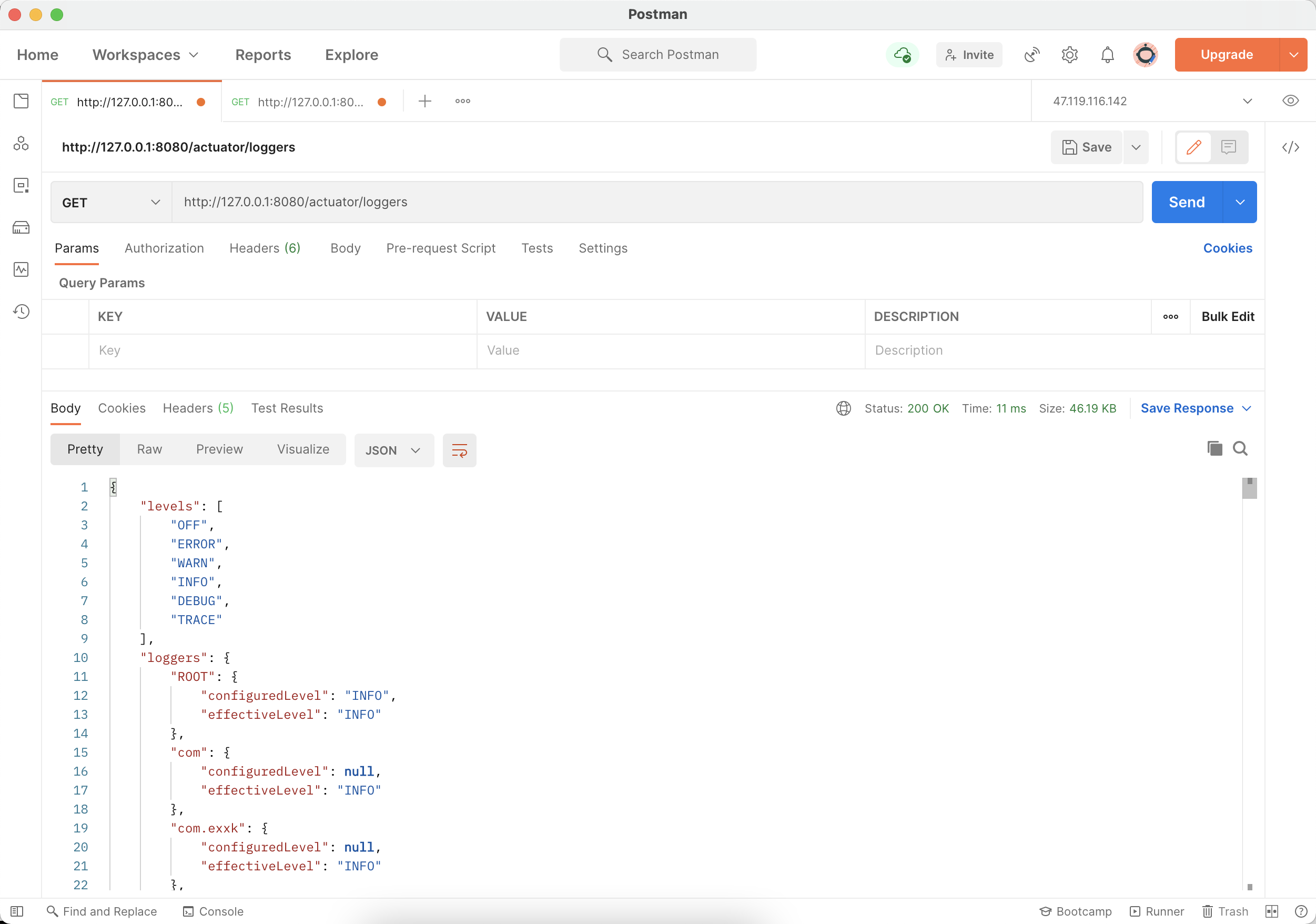

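While a project is running, problems occasionally have to be investigated through the logs. Normally only the info level is enabled, which is inconvenient for debugging, and changing the level would require a restart. This section changes the Spring Boot log level without restarting.

spring-boot-starter-actuator is a dependency for monitoring the health of a Spring Boot application.

It provides three kinds of functionality

Add the dependency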

1 | implementation 'org.springframework.boot:spring-boot-starter-actuator' |

Configure the loggers endpoint. Three endpoints are exposed here: /actuator/loggers, /actuator/info, and /actuator/health

1 | management.endpoints.web.exposure.include=loggers,health,info |

GET /actuator/loggers returns the log levels of all packages.

Query the log level of a specific package with GET /actuator/loggers/<package path>

1 | # GET /actuator/loggers/com.exxk.adminClient |

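To change the log level of a specific package, send POST /actuator/loggers/<package path> with the JSON body {"configuredLevel": "DEBUG"}. Once the request succeeds, the package's log level changes and logs at the configured level start to appear.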

1 | # POST /actuator/loggers/com.exxk.adminClient |
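The same request can be built from Java with the JDK's HTTP client (Java 11 or later). This is a minimal sketch: the class `LoggerLevelRequest` and the base URL passed in are assumptions, while the path and JSON body follow the endpoint described above.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Hypothetical helper that builds the log-level change request for the loggers endpoint.
public class LoggerLevelRequest {

    /** Builds POST {baseUrl}/actuator/loggers/{loggerName} with a configuredLevel body. */
    public static HttpRequest build(String baseUrl, String loggerName, String level) {
        String body = "{\"configuredLevel\": \"" + level + "\"}";
        return HttpRequest.newBuilder()
                .uri(URI.create(baseUrl + "/actuator/loggers/" + loggerName))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
    }
}
```

To send it, pass the request to `HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString())`, then query the same path with GET to confirm the new level.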

Add the dependency. Note that the version must match the Spring Boot version, otherwise startup fails.

1 | // https://mvnrepository.com/artifact/de.codecentric/spring-boot-admin-starter-server |

Add the @EnableAdminServer annotation to the startup class

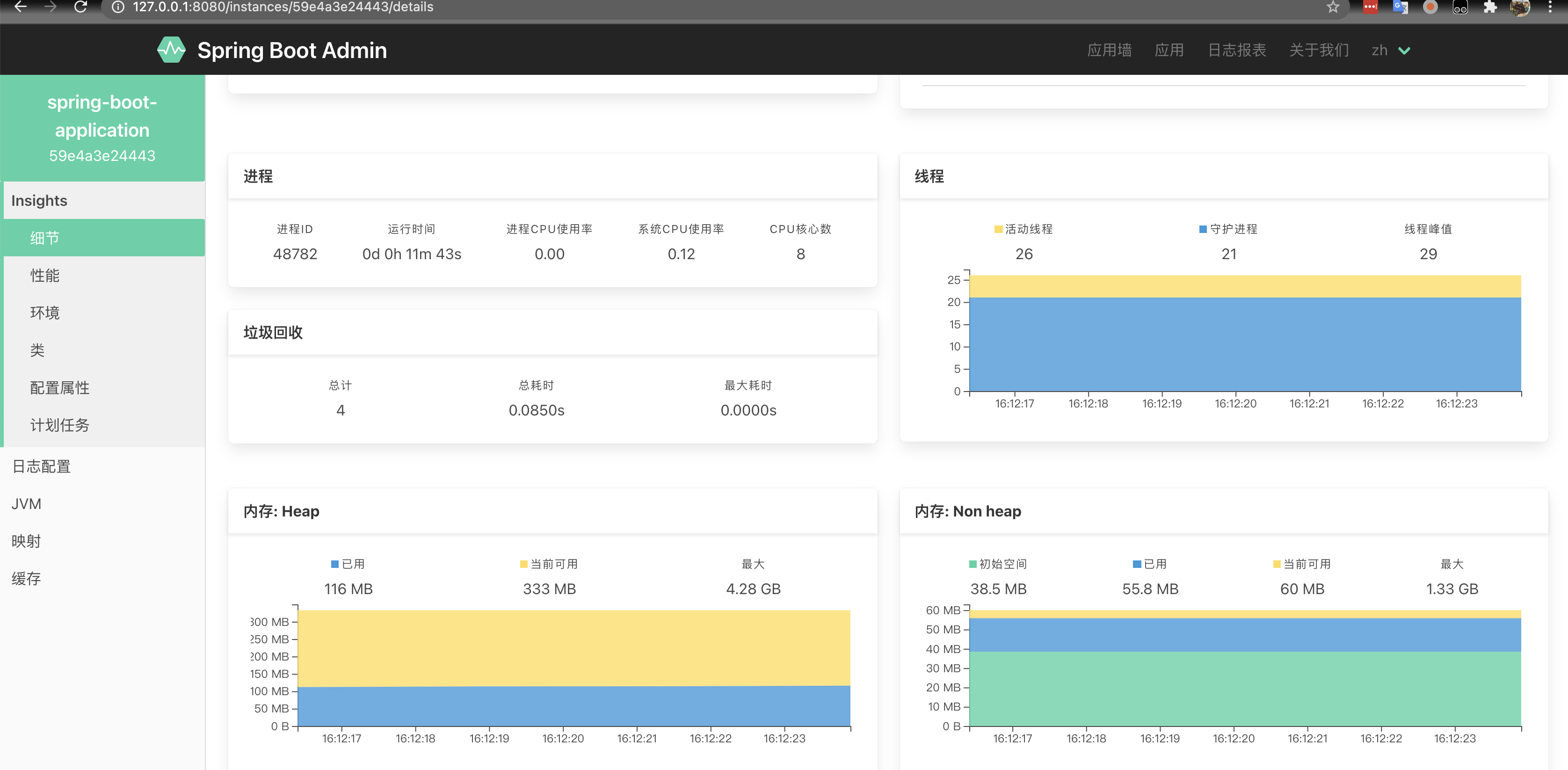

Run it, then visit http://127.0.0.1:8080

Add the dependency

1 | // https://mvnrepository.com/artifact/de.codecentric/spring-boot-admin-server-ui-login |

Add the Spring Security configuration

1 | @Configuration(proxyBeanMethods = false) |

Set the password in the configuration file

1 | spring.boot.admin.client.username=admin |

Run it directly from the official image codecentric/spring-boot-admin

1 | docker run -d \ |

Then visit http://<local ip or mapped public ip>:8080

Add the dependency

1 | implementation group: 'de.codecentric', name: 'spring-boot-admin-starter-client', version: '2.2.2' |

Add the configuration

1 | spring.boot.admin.client.url=http://localhost:8080 |

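Start the application. The Spring Boot app is now registered with the admin server, and its log level can be changed dynamically on the logging configuration page.

Add the dependency

1 | <!-- The major version 2.7.x must match the server's 2.7.x --> |

Add the Spring Boot configuration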

1 | spring: |

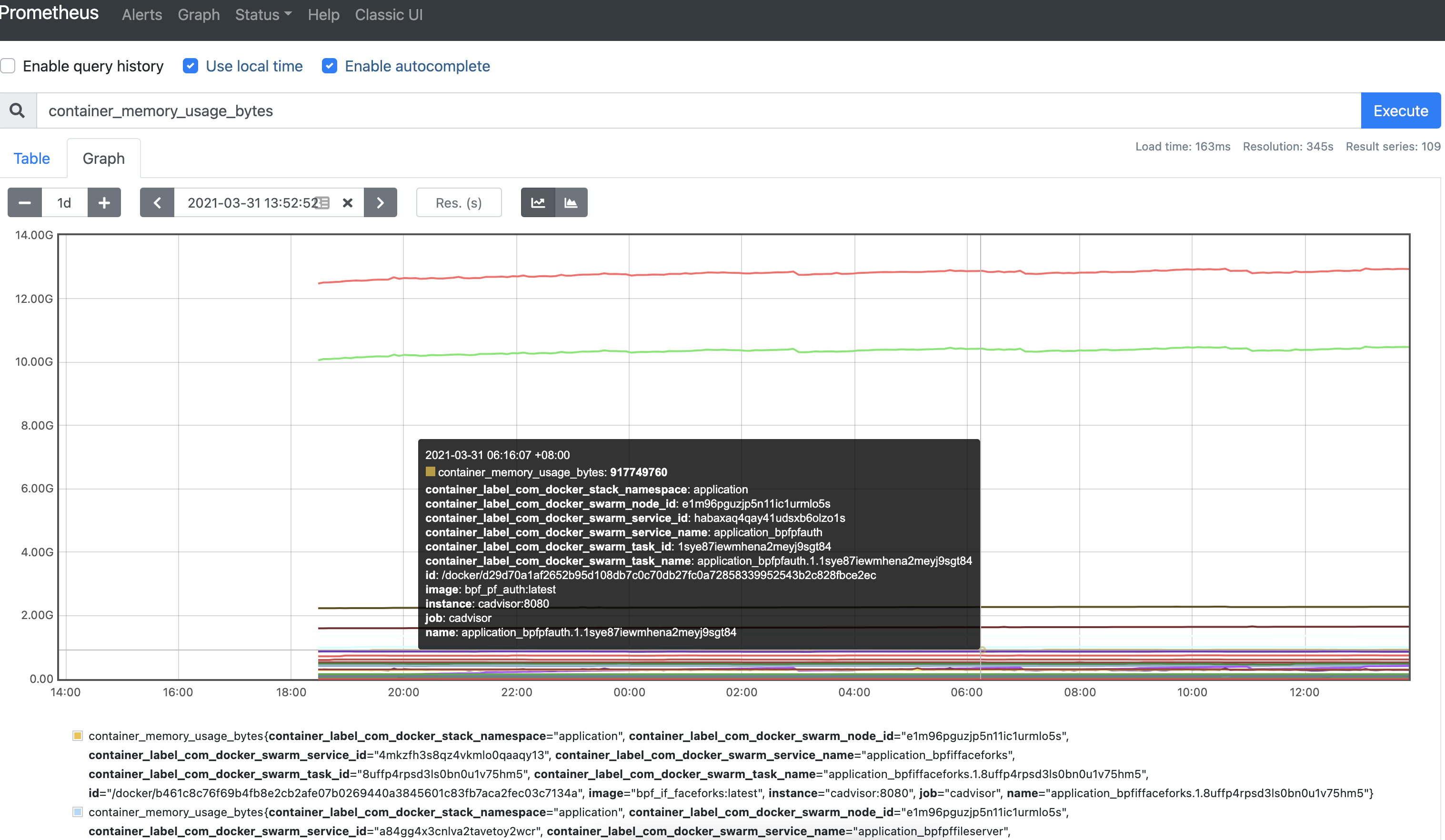

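The /actuator/httptrace HTTP trace endpoint returns 404; the suggested fix is to use Sleuth instead.

After deploying several microservices with docker, the host's memory usage kept creeping upward. To find out which microservice was responsible, a monitoring tool was needed to track performance and other metrics for all microservices.

Resource usage

The prometheus configuration file /docker_data/v-monitor/prometheus/prometheus.yml contains the following: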

1 | scrape_configs: |

Swarm deployment script

1 | version: '3.2' |

| Metric name | Type | Meaning |
|---|---|---|
| container_memory_usage_bytes | gauge | Current memory usage of the container (bytes) |
| machine_memory_bytes | gauge | Total memory of the host (bytes) |

1 | grafana: |

The default account and password are admin/admin

Official configuration guide: GRAFANA SUPPORT FOR PROMETHEUS

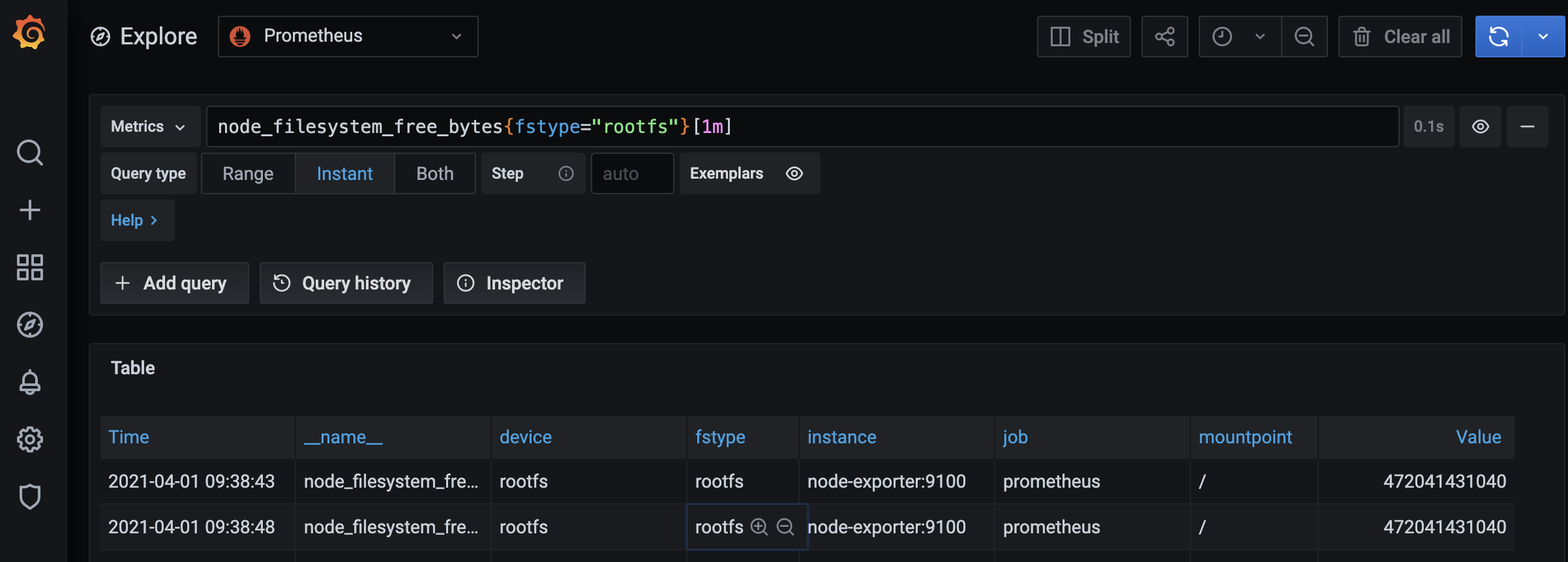

Add to the deployment

1 | node-exporter: |

Change prometheus.yml to the following:

1 | scrape_configs: |

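1 | # node_filesystem_free_bytes is the metric being queried (think of it as the table name); {fstype="rootfs"} acts as the query condition and selects all data whose fstype is rootfs; [1m] is a range vector covering the last minute |

1 | # name is the container name |

To be updated…

_bytes suffix: find the metric name used after the upgrade and replace the old one with it.

For the error "Only queries that return single series/table is supported", check whether the panel on the right needs to merge series; if no merging is needed, a single chart type should be selected.

Complete swarm deployment file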

1 | version: '3.2' |

1 | scrape_configs: |

1 | # Base image: alpine (small, secure, popular, convenient) |

1 | HEALTHCHECK --start-period=40s --interval=30s --timeout=5s --retries=5 CMD (wget http://localhost:9303/actuator/health -q -O -) | grep UP || exit 1 |

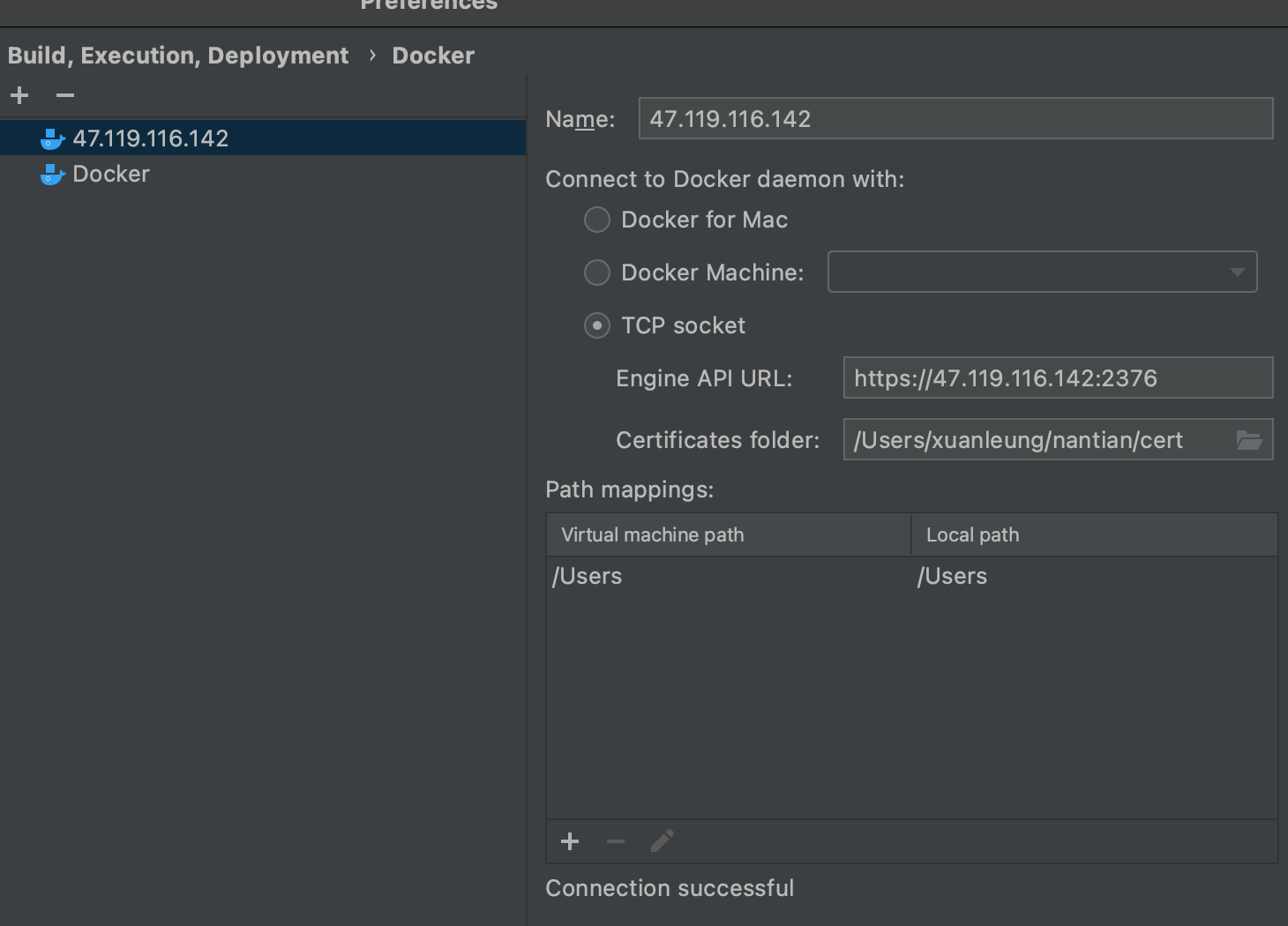

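Official guide: Protect the Docker daemon socket

A TLS (HTTPS) secure connection is verified with certificates. Because the connection protocol is https, the port used for connecting becomes 2376.

Port to open on Alibaba Cloud: 2376

1 | # Files required on the server |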

1 | mkdir /docker_data/cert/ |

Edit the file /etc/docker/daemon.json (vim /etc/docker/daemon.json)

1 | { |

After the configuration is done, restart docker

1 | systemctl daemon-reload |

1 | # Server-side test |

Copy the three files ca.pem, cert.pem, and key.pem into a cert directory, point IDEA at that directory, and use https://ip:2376 as the url

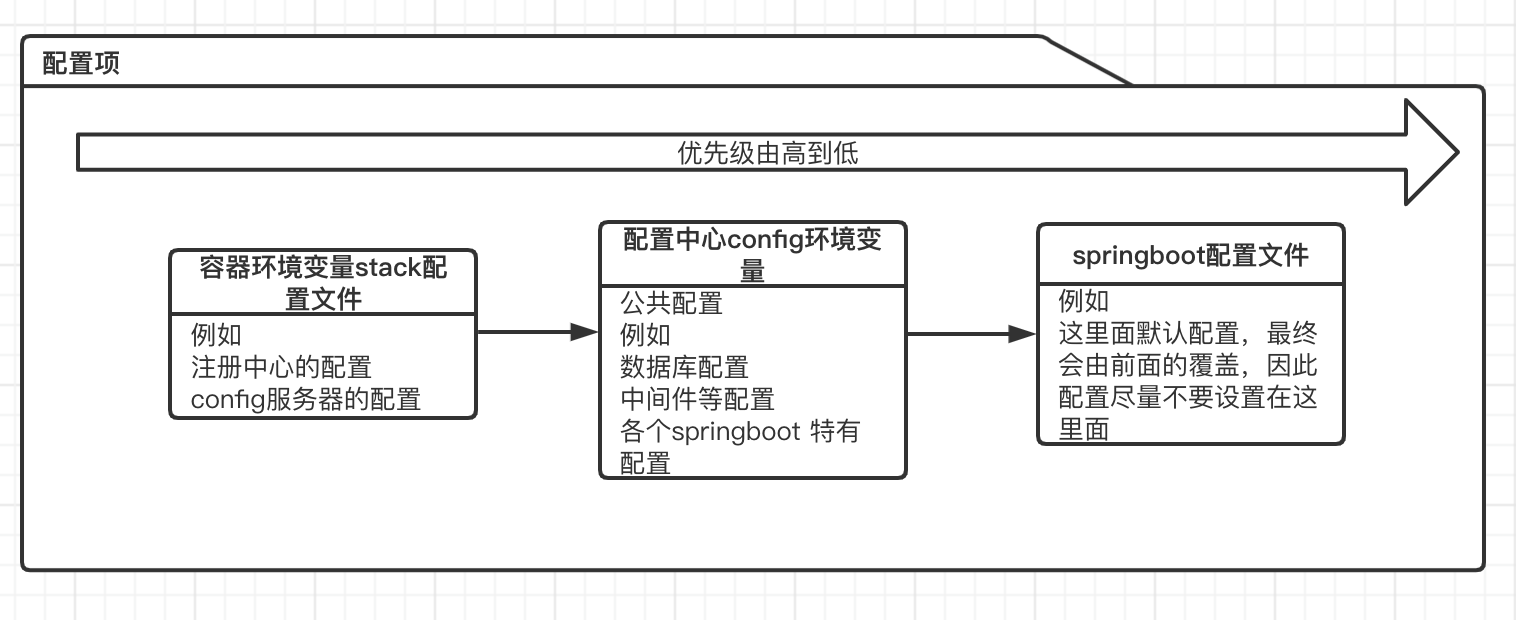

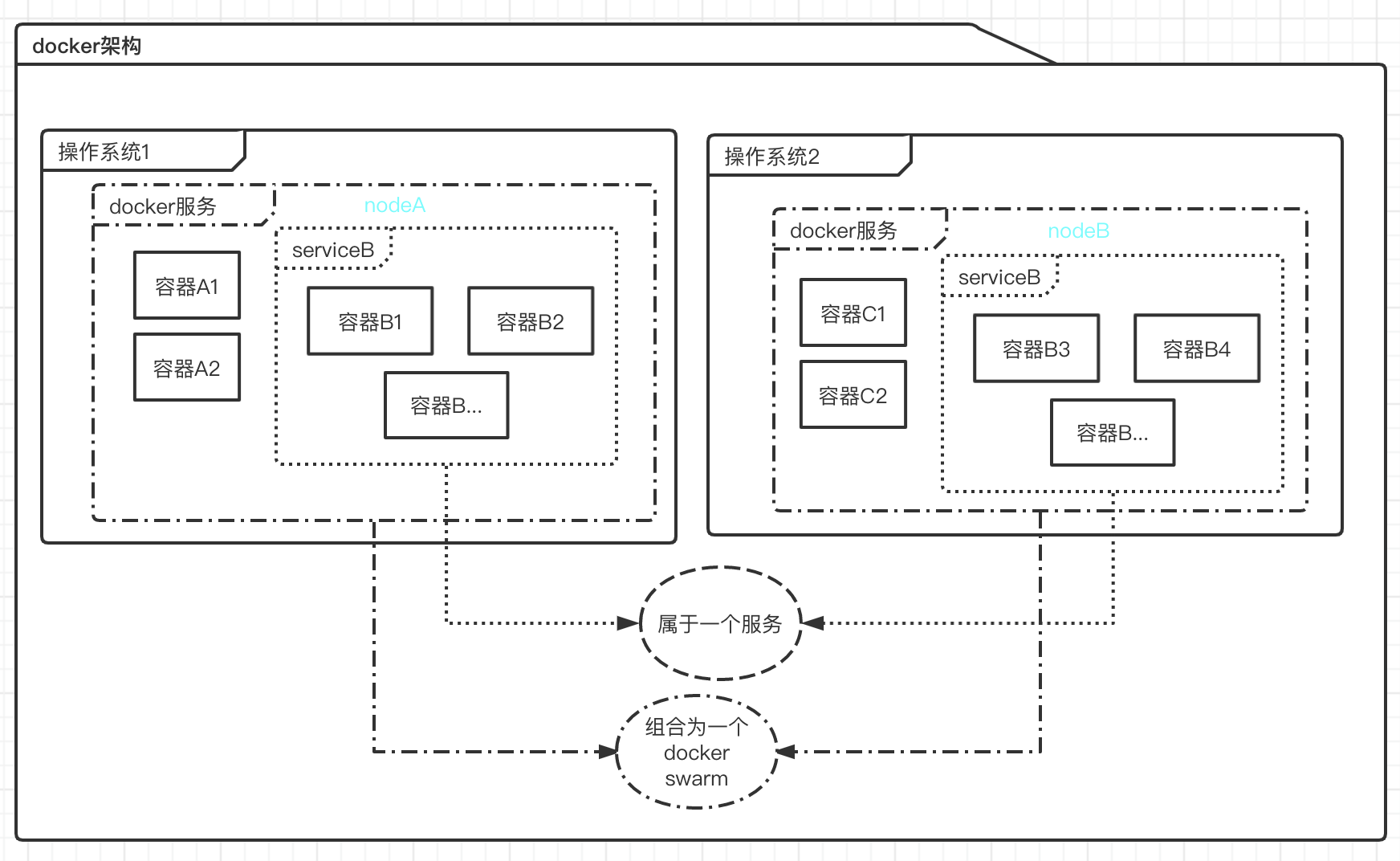

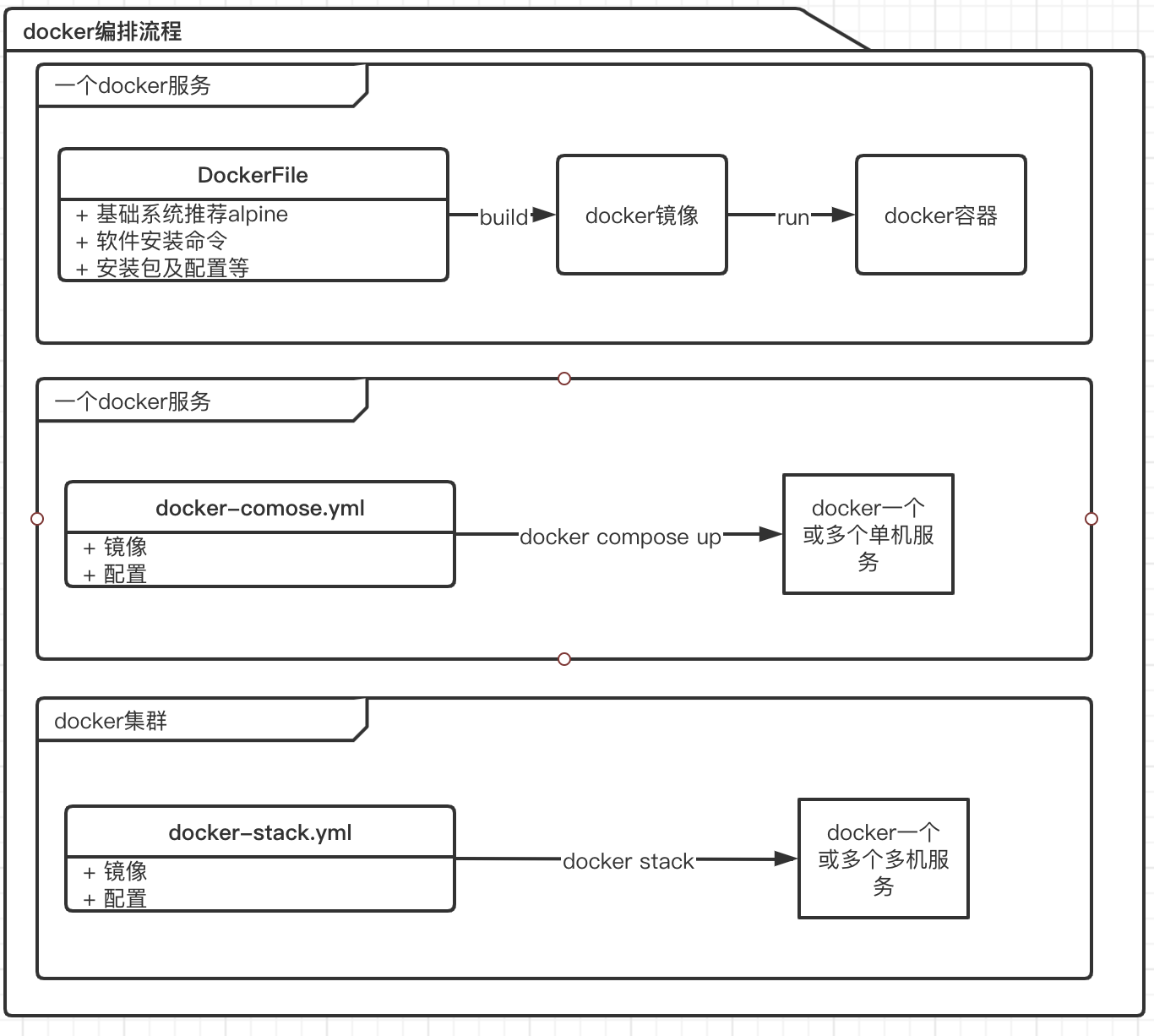

The terms are explained briefly below and covered in detail later:

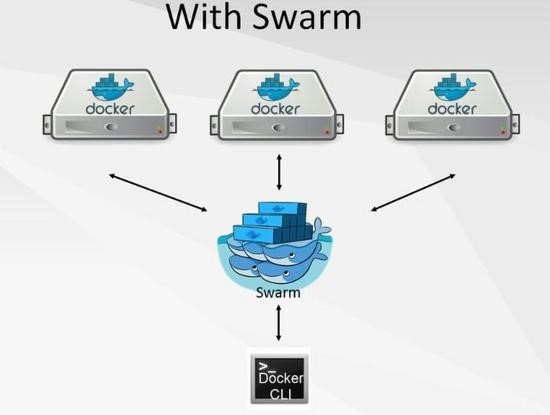

docker swarm service relationships

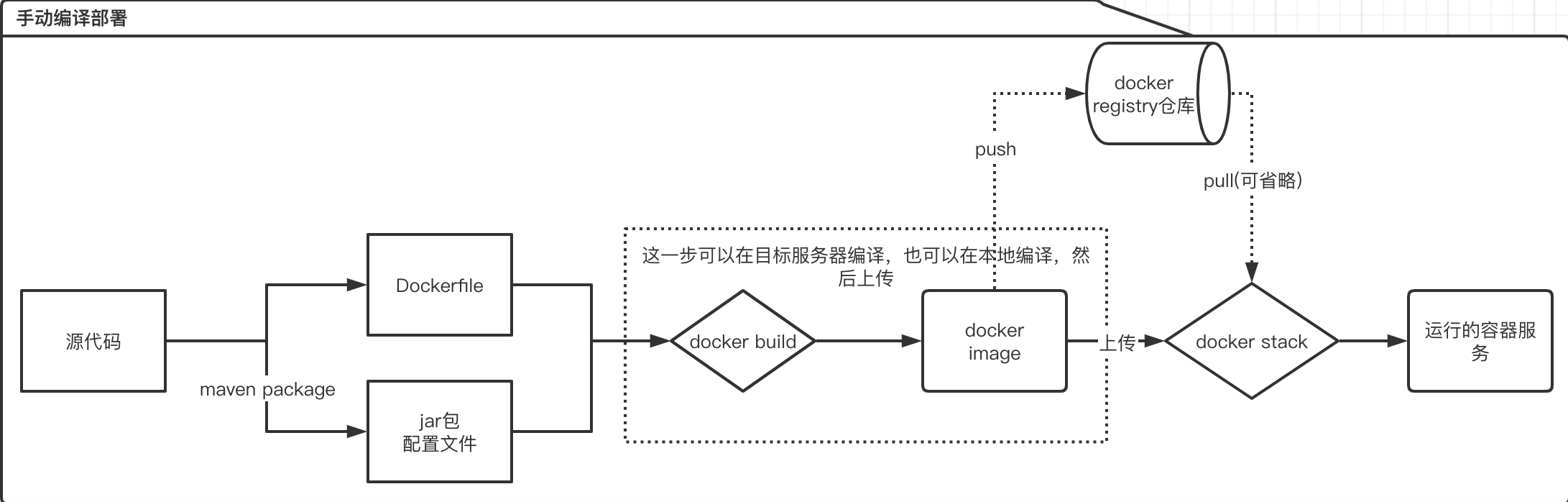

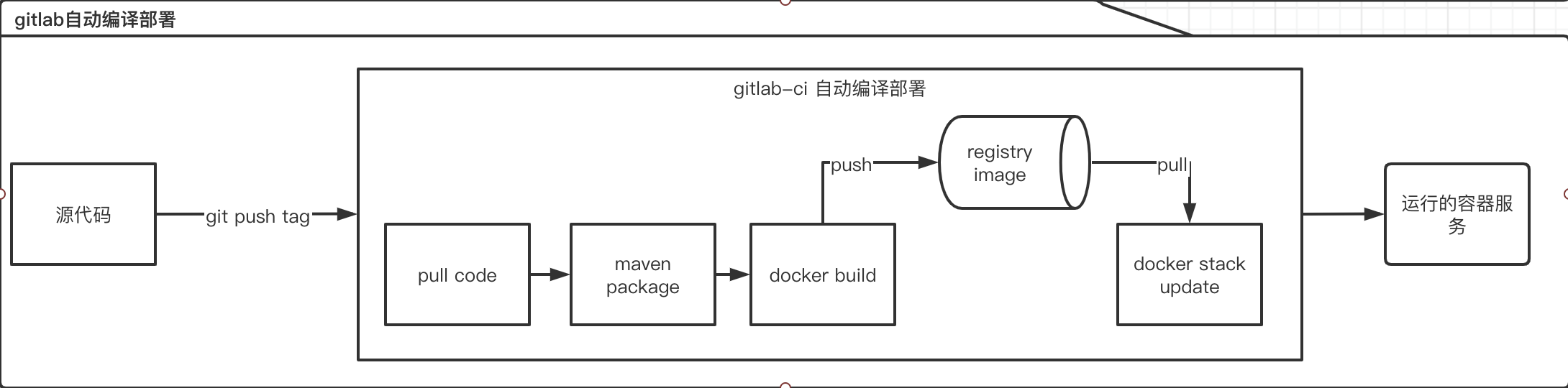

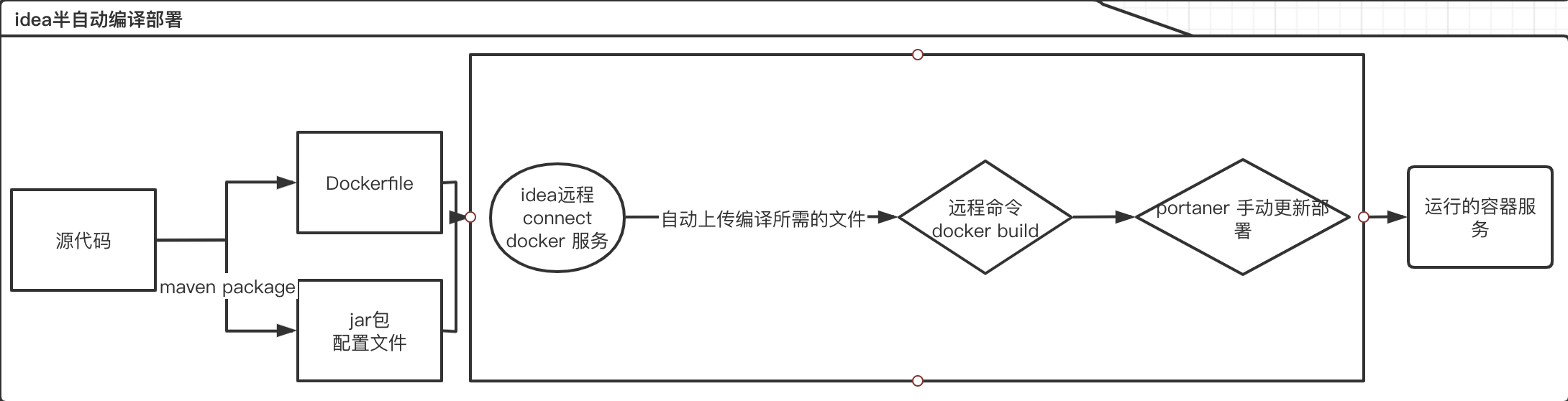

docker orchestration and deployment flow

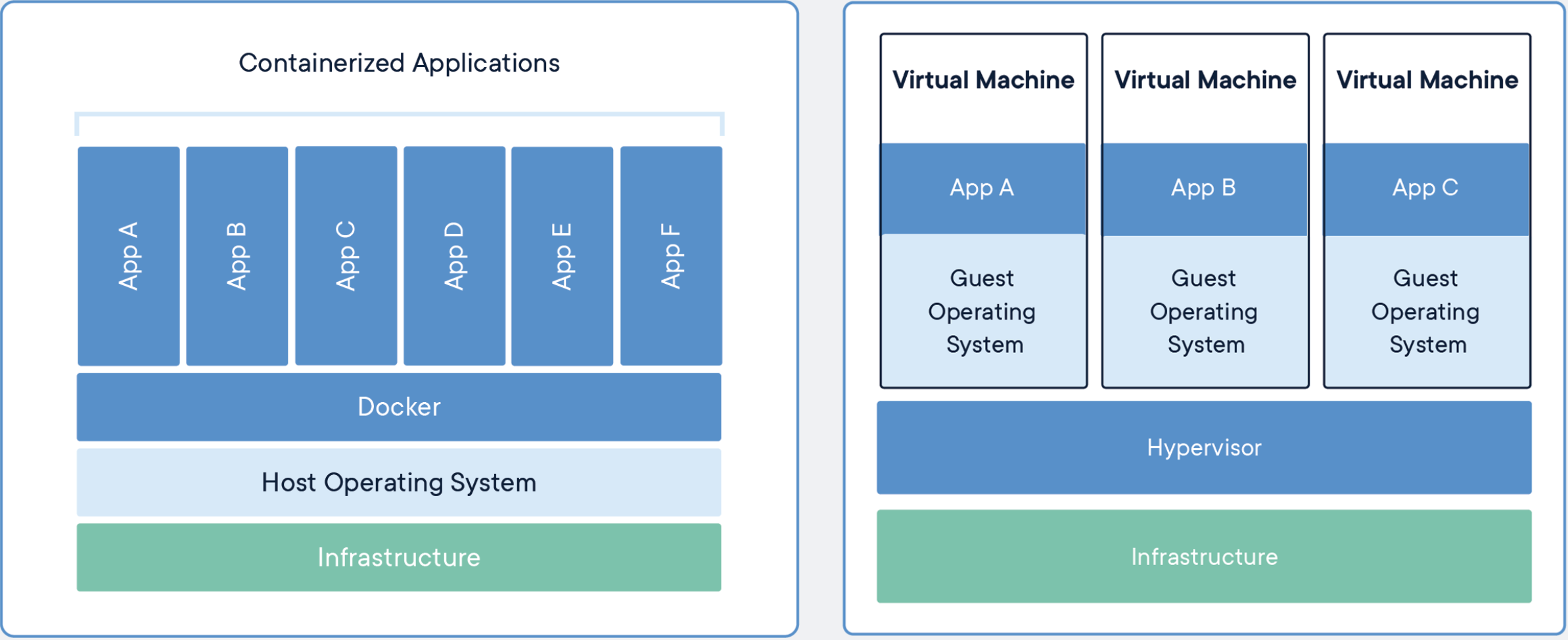

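A container is a standard unit of software that packages code together with all its dependencies, so that an application runs quickly and reliably when moved from one computing environment to another. A Docker container image is a lightweight, standalone, executable software package that contains everything needed to run an application: code, runtime, system tools, system libraries, and settings.

Containers virtualize the operating system rather than the hardware, which makes them more portable and efficient.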

1 | sudo yum install -y yum-utils |

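1 | # Image build and orchestration commands |

Common dockerfile instructions

1 | # Base image: alpine (small, secure, popular, convenient) |

Spring Boot supports system environment variables

docker supports setting container environment variables

Design principle: adapt to different environments without changing the base image

1 | Priority, from high to low |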
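How an environment variable set on the container can override a property baked into the image can be sketched as follows. The name conversion follows Spring Boot's documented relaxed binding rule for environment variables (replace dots with underscores, remove dashes, convert to upper case); the class `EnvOverrideSketch` and the simple "environment value, else default" lookup are hypothetical simplifications and do not reproduce the full priority list.

```java
import java.util.Locale;
import java.util.Map;

// Hypothetical sketch: resolve a property from container environment variables first.
public class EnvOverrideSketch {

    /** Converts a property name such as "spring.redis.host" to "SPRING_REDIS_HOST". */
    public static String toEnvName(String property) {
        return property.replace('.', '_').replace("-", "").toUpperCase(Locale.ROOT);
    }

    /** Returns the environment value if present, otherwise the default packaged in the image. */
    public static String resolve(String property, Map<String, String> env, String defaultValue) {
        String value = env.get(toEnvName(property));
        return value != null ? value : defaultValue;
    }
}
```

In a real container the map would be `System.getenv()`, so the same image can point at a different redis host per environment simply by setting `SPRING_REDIS_HOST` in the stack file.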