Deploying a web project with Kuboard (manual version)

Create a Harbor repository.

Build the web project locally.

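Add a Dockerfile to the project root:

    # Serve the compiled front end with nginx
    FROM nginx:alpine
    # Remove the stock site config so that only our own is loaded
    RUN rm /etc/nginx/conf.d/default.conf
    COPY default.conf /etc/nginx/conf.d/
    # build/ is the output directory of the local build
    COPY build/ /usr/share/nginx/html/

Add the nginx configuration file default.conf to the project root: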


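    # Proxy target for the backend API. The backend is deployed in the same namespace
    # and reached through its Service, so no external IP needs to be configured here.
    upstream api_server {
        # Service name of the backend
        server backend-application:8080;
    }

    server {
        listen 80;
        server_name localhost;
        underscores_in_headers on;

        location / {
            root /usr/share/nginx/html;
            index index.html index.htm;
            # Fall back to index.html so that entering a front-end route directly
            # in the browser address bar does not produce an nginx error
            try_files $uri $uri/ /index.html =404;
        }

        # All backend endpoints share the /api prefix; change it to whatever
        # common prefix your own project uses
        location /api/ {
            # Note: this pattern starts with a literal "~", so it never matches a
            # request URI and the rewrite is a no-op. proxy_pass below therefore
            # forwards requests with the /api/ prefix intact. Using ^/api/(.*)$
            # instead would strip the prefix before proxying.
            rewrite ~/api/(.*)$ /$1 break;
            proxy_pass http://api_server/api/;
            proxy_set_header Host $host;
            proxy_pass_request_headers on;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_read_timeout 300;
            proxy_send_timeout 300;
        }

        error_page 500 502 503 504 /50x.html;
        location = /50x.html {
            root html;
        }
    }

Build the image by running the following in the project root: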

    docker build --no-cache=true --build-arg JAR_FILE='*' -f Dockerfile -t harbor.iexxk.dev/base/test_web:latest .

Push the image by running the following in the project root:

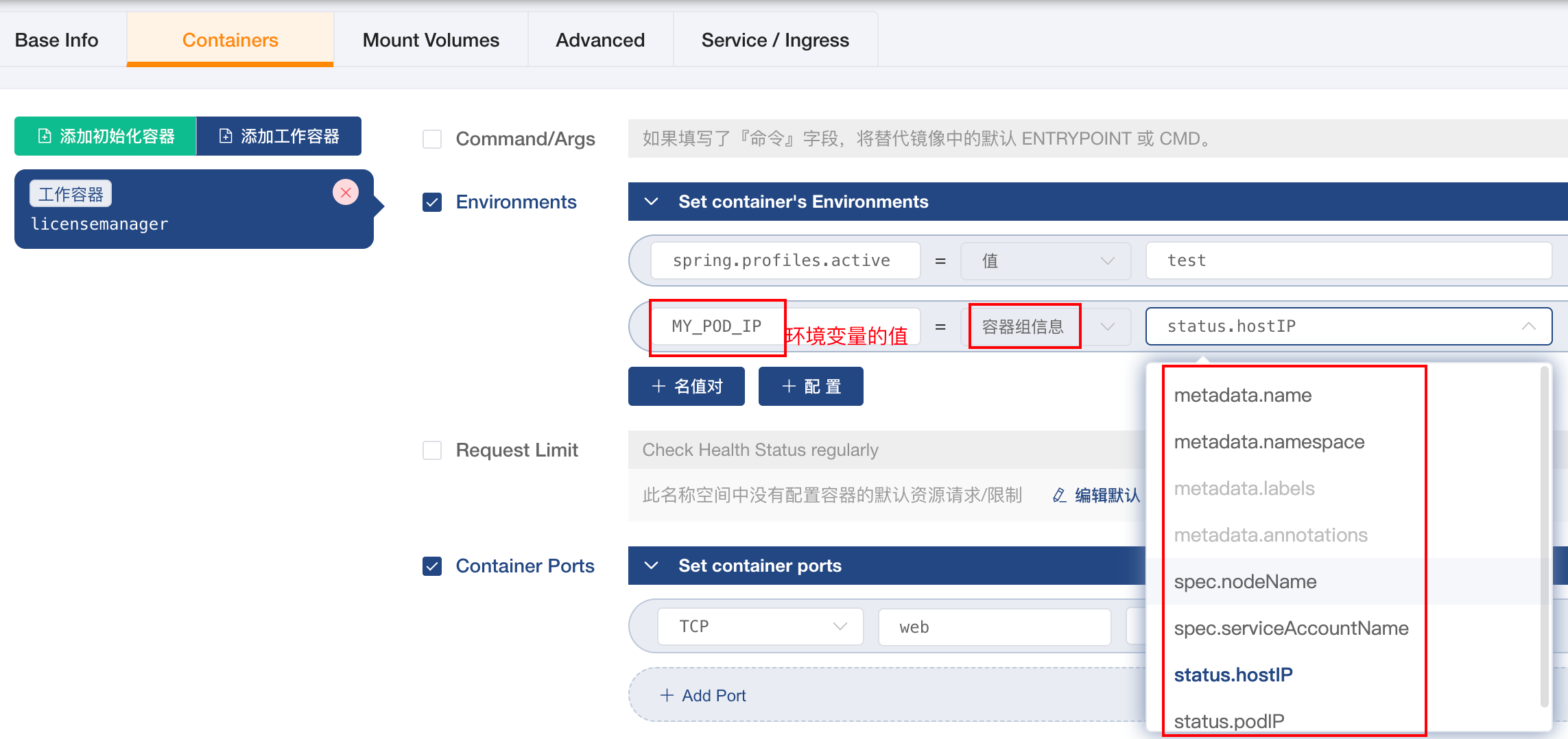

    docker push harbor.iexxk.dev/base/test_web:latest

Create a Deployment in Kuboard to deploy the image.
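The article creates the Deployment through the Kuboard UI. For readers who prefer a manifest, the following is a minimal sketch of a roughly equivalent Deployment and Service. The resource name `test-web`, the label `app: test-web`, the replica count, and the file name are assumptions made for illustration; only the image reference and port 80 come from the steps above.

```shell
# Write a sketch manifest for the front end.
# The name "test-web" and the label "app: test-web" are hypothetical.
cat > test-web.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: test-web
spec:
  replicas: 1
  selector:
    matchLabels:
      app: test-web
  template:
    metadata:
      labels:
        app: test-web
    spec:
      containers:
        - name: test-web
          image: harbor.iexxk.dev/base/test_web:latest
          imagePullPolicy: Always   # re-pull, since the tag is the mutable "latest"
          ports:
            - containerPort: 80     # nginx listens on 80 in default.conf
---
apiVersion: v1
kind: Service
metadata:
  name: test-web
spec:
  selector:
    app: test-web
  ports:
    - port: 80
      targetPort: 80
EOF

# To apply it, use the namespace in which backend-application runs:
# kubectl apply -n <namespace> -f test-web.yaml
```

The namespace matters because default.conf refers to the backend by its short Service name, `backend-application`, which resolves only from pods in the same namespace. If the Harbor project is private, the pod spec would probably also need an `imagePullSecrets` entry.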

Configure an Ingress that maps a custom domain to the front-end service on port 80.
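As a rough illustration of that mapping, the sketch below writes an Ingress manifest to a file. The host `web.example.com`, the Service name `test-web`, and the file name are placeholders, not values from the article; it assumes a Service with that name exposes the front-end pods on port 80.

```shell
# Write a sketch Ingress manifest.
# Host and Service name are hypothetical; replace them with your own.
cat > test-web-ingress.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: test-web
spec:
  rules:
    - host: web.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: test-web
                port:
                  number: 80
EOF

# To apply it in the namespace of the front-end Service:
# kubectl apply -n <namespace> -f test-web-ingress.yaml
```

Only the single path `/` is routed here. Requests to `/api/...` also reach the front-end nginx, which then forwards them to the backend. Depending on the cluster, an `ingressClassName` may need to be set under `spec`.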

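Summary:

That completes the configuration. The front end is reachable through the custom domain, and backend requests sent to the custom domain plus /api are forwarded by the nginx instance that was deployed with the front end.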

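Open question: as I recall, a front end normally has to be told the backend API address. Here none was specified, and I do not know what the front end does internally, yet once deployed it calls the API directly at the custom domain plus /api.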
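One likely explanation, offered as an assumption rather than a confirmed fact about this project, is that the front end requests relative paths such as `/api/...`. A browser resolves a relative path against the origin of the page, so the request goes to the custom domain and is then proxied by nginx. The sketch below lists the absolute URLs found in the compiled bundle as a quick check. The helper name `list_absolute_urls` is hypothetical, and `build` is the output directory copied in the Dockerfile.

```shell
# List the distinct absolute http(s) origins referenced in the compiled bundle.
# If no backend host shows up, the front end most likely calls relative
# paths such as /api/..., which the browser resolves against the page origin.
list_absolute_urls() {
  # $1: build output directory
  grep -rhoE "https?://[A-Za-z0-9._:-]+" "$1" 2>/dev/null | sort -u
}

list_absolute_urls build
```

An empty result, or one containing only unrelated hosts such as XML namespace URLs, would be consistent with the relative-path explanation.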